2025

Show or Tell? Modeling the evolution of request-making in Human-LLM conversations

Shengqi Zhu, Jeffrey M. Rzeszotarski, David Mimno

Findings of the European Chapter of the Association for Computational Linguistics (EACL), 2026

We set up a new framework to examine the language (expressions) of LLM users apart from the specific task content, modeling how people contextualize their requests within the conversational format. From there, we study the diachronic evolution of user behaviors through text, a novel and crucial indicator of human-LLM interactions.

Navigating the Fog: How University Students Recalibrate Sensemaking Practices to Address Plausible Falsehoods in LLM Outputs

Chao Zhang, Shengqi Zhu, Xinyu Yang, Yu-Chia Tseng, Shenrong Jiang, Jeffrey M. Rzeszotarski

Proceedings of the 7th ACM Conference on Conversational User Interfaces (CUI), 2025

Students, as a representative group of LLM users, work with chat interfaces in their daily study, which requires factual accuracy. We study how they make sense, in real time, of textual inputs and outputs that contain plausible falsehoods, identifying patterns and drawing connections with the classic sensemaking model.

Data Paradigms in the Era of LLMs: On the Opportunities and Challenges of Qualitative Data in the WILD

Shengqi Zhu, Jeffrey M. Rzeszotarski, David Mimno

Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems (CHI LBW), 2025

We discuss the current and future affordances of using large-scale in-the-wild user activities as a source of qualitative user data, and highlight the major remaining challenges: finer-grained control and more ethical data practices.

What We Talk About When We Talk About LMs: Implicit Paradigm Shifts and the Ship of Language Models

Shengqi Zhu, Jeffrey M. Rzeszotarski

Proceedings of the Nations of the Americas Chapter of the Association for Computational Linguistics (NAACL), 2025

"Language models" is an evergreen and viral scientific term. What exact models have we used it to refer to? What are the scientific implications for the same term to mean "BERT/GPT-2" in 2019 but entirely different things now? Inspired by the Ship of Theseus, our work studies this Ship of LMs in detail. (image source: SRF Kultur Sternstunden)

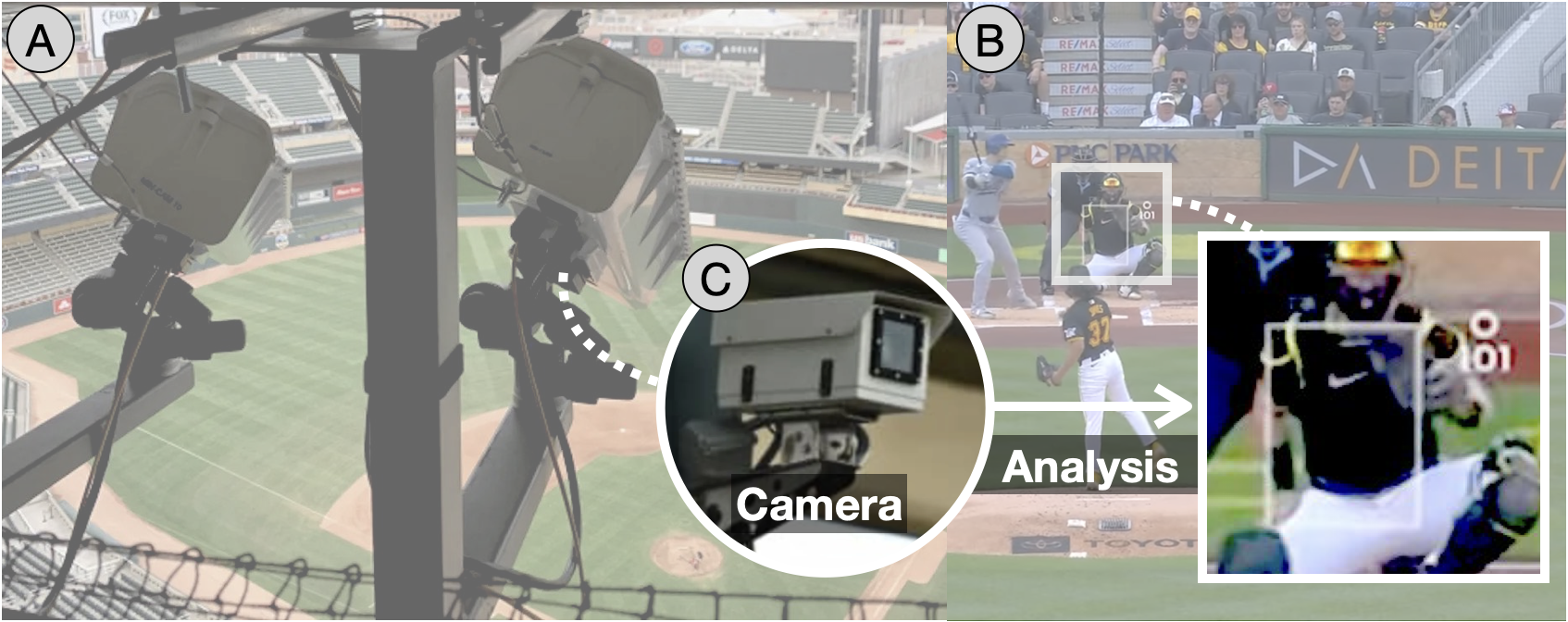

Million Eyes on the “Robot Umps”: The Case for Studying Sports in HRI Through Baseball

Project led by Waki Kamino and Andrea Wen-Yi Wang; served as one of the 23 sub-section authors.

The 20th ACM/IEEE International Conference on Human-Robot Interaction (HRI), 2025

The introduction of "robot umpires" is a classic and controversial example of adding automation to high-stakes scenarios. We explore how this case can inspire research, including in crowd work and NLP under ambiguity.

2024

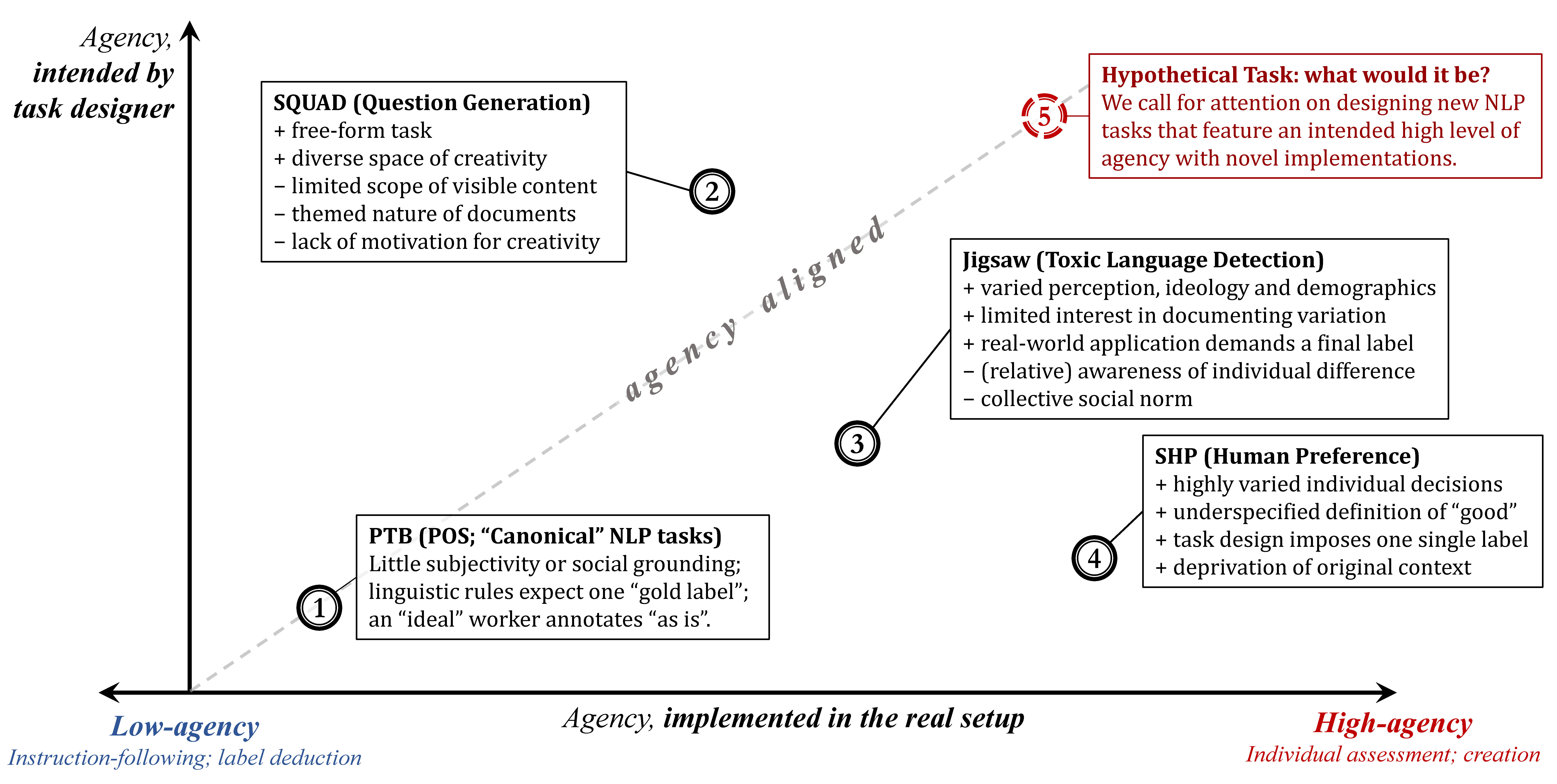

“Get Their Hands Dirty, Not Mine”: On Researcher-Annotator Collaboration and the Agency of Annotators

Shengqi Zhu, Jeffrey M. Rzeszotarski

Findings of the Association for Computational Linguistics (ACL Findings), 2024

This opinion piece discusses the role of researchers in collaboration with annotators. We call for a new language of annotator agency in task design that considers how much individual assessment and creation are incorporated.

2023 and Earlier

Prior to Cornell, I mainly published with my long-time mentor and collaborator, Quzhe Huang. Many of my explorations during this period have fundamentally inspired my thoughts on NLP and language data in the real world, e.g., the application of noisy annotations, and the compromised effectiveness and problematic setups of NLP tasks under practical constraints.

Please see my Google Scholar profile for the full list of my earlier work published at ACL 2021/2022/2023.